Architecting Resilient Node.js Microservices with Worker Threads

Node.js has revolutionized web development with its event-driven, non-blocking architecture, but even this powerful platform has limitations when confronted with CPU-intensive tasks. In this post, we'll explore how worker threads can prevent your Node.js applications from becoming unresponsive during heavy computations, particularly in containerized environments where performance issues can quickly cascade into infrastructure failures.

Why Node.js Architecture Makes Threading Different

Node.js follows a fundamentally different concurrency model than traditional platforms like Java, Python, or Go. To understand why worker threads are so important, we need to first understand what makes Node.js unique.

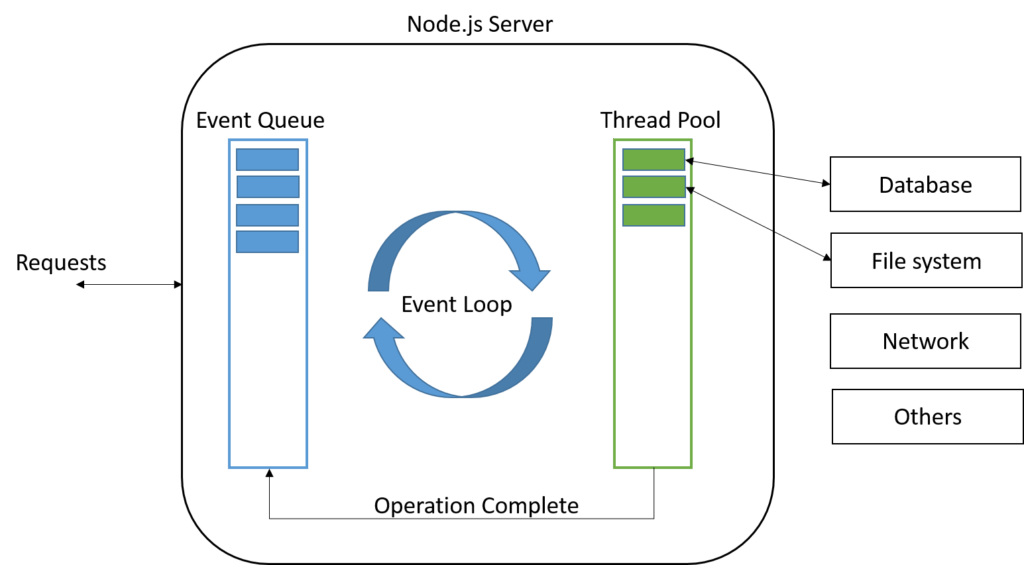

Unlike multi-threaded server environments, Node.js operates on a single thread with an event loop at its core. This architecture was intentionally designed to handle I/O-bound operations efficiently without the overhead of thread management.

When a Node.js application runs:

- The event loop processes operations in phases (timers, callbacks, polling, etc.)

- Asynchronous I/O operations are offloaded to the system kernel

- The event loop continues to handle other requests while waiting for I/O to complete

- When an operation finishes, its callback gets scheduled in the appropriate queue

- The event loop executes these callbacks in sequential order

This architecture excels at handling concurrent connections and I/O operations, making Node.js perfect for network applications. However, it falls short when performing CPU-intensive tasks.

In contrast, languages like Java, Python, and Go typically handle web requests using multiple threads or processes:

- Java: Uses thread pools where each request can be processed on a separate thread

- Python: Uses processes or threads (depending on the web server) to handle concurrent requests

- Go: Uses lightweight goroutines to efficiently handle concurrency

In these environments, if one request involves heavy computation, it only blocks that specific thread or goroutine, while others continue functioning normally.

The Event Loop Problem: When Node.js Gets Stuck

The event loop is the heart of Node.js that enables asynchronous programming. It consists of several phases that execute in a specific sequence:

- Timers phase: Executes callbacks scheduled by

setTimeout()andsetInterval() - Pending callbacks phase: Executes I/O callbacks deferred to the next loop iteration

- Idle/prepare phase: Used internally by Node.js

- Poll phase: Retrieves new I/O events and executes I/O-related callbacks

- Check phase: Executes callbacks scheduled by

setImmediate() - Close callbacks phase: Executes close event callbacks

The core problem with Node.js’s single-threaded architecture becomes evident when a CPU-intensive operation takes over the event loop. Unlike asynchronous I/O tasks that can be offloaded, such operations monopolize the thread, leaving the event loop unable to process anything else until the computation is finished. During this period of blockage, the server essentially grinds to a halt: new HTTP requests pile up unanswered, timers fail to execute, ongoing I/O operations remain incomplete, and even critical health checks from Kubernetes or load balancers go unacknowledged.

This bottleneck doesn’t just affect the immediate request—it sets off a chain reaction. In containerized environments, where responsiveness is key to maintaining stability, this kind of event loop congestion can cascade into widespread failures. Pods may be marked unhealthy due to missed health checks, load balancers might time out waiting for responses, and users are left facing frustrating delays or outright failures.

The Kubernetes Nightmare: When Your Pods Start Failing

In Kubernetes environments, the impact of a blocked main thread can be catastrophic, setting off a chain reaction that destabilizes your entire infrastructure. Imagine this scenario: A user sends a request to your Node.js application, but it involves CPU-intensive processing. As the main thread gets consumed by this computation, it becomes unresponsive to other tasks. Meanwhile, Kubernetes continues to send liveness and readiness probes to determine the health of the pod. Since the Node.js process is stuck handling the heavy computation, it fails to respond to these probes in time.

As the probe timeouts accumulate, Kubernetes marks the pod as unhealthy and initiates a restart. But this doesn’t solve the underlying issue—each restart only resets the cycle. To make matters worse, load balancers waiting for responses from the pod also time out, further exacerbating the problem. What started as a single compute-heavy request now spirals into a cascading failure: users experience API timeouts, pods repeatedly restart, and your system’s overall stability begins to crumble under pressure.

This scenario highlights how a seemingly small architectural oversight—blocking the Node.js event loop—can snowball into widespread infrastructure degradation in containerized environments.

Worker Threads: The Solution to CPU-Intensive Tasks

When Node.js introduced the worker_threads module (version 10.5.0 and stable since v12), it marked a significant step forward in addressing one of the platform’s most persistent limitations: its inability to handle CPU-intensive tasks without blocking the main thread. Worker threads provide a way to execute JavaScript code in parallel, enabling true multithreading capabilities within a single Node.js process. This innovation allows developers to offload heavy computations to separate threads, ensuring that the main thread remains free to handle I/O operations and respond to incoming requests.

Unlike older concurrency mechanisms in Node.js, such as child_process or cluster, worker threads are designed specifically for tasks that demand high computational power. While child processes create entirely separate instances of the Node.js runtime, worker threads operate within the same process, allowing them to share memory efficiently using tools like SharedArrayBuffer. This design makes worker threads lighter and faster, with lower overhead compared to process-based solutions.

Worker threads also come with their own isolated memory space, which ensures that parallel execution is safe and avoids issues related to shared state. Communication between the main thread and workers is facilitated through a simple messaging system using postMessage and onmessage. This allows data to be passed back and forth seamlessly while maintaining thread isolation.

When you create a worker thread in Node.js, it runs in parallel with the main thread but operates on its own event loop. This means that while the worker handles its assigned task—be it a computation or data transformation—the main thread continues executing other parts of your application. The two communicate via an event-based messaging system.

Node.js Worker Threads vs. Python and Java Threads

Java is inherently multi-threaded, offering native support for threads through its Thread class and Runnable interface. Each thread in Java operates independently, sharing memory by default, which enables seamless interaction between threads but also introduces risks like race conditions. Java threads are tightly integrated with the JVM and the operating system, allowing developers to leverage multi-core processors efficiently for tasks such as concurrent calculations or handling multiple I/O operations simultaneously.

In contrast, Node.js worker threads are not “true threads” in the conventional sense. They run isolated instances of the V8 JavaScript runtime, meaning memory updates in one thread are not visible to others unless explicitly shared using mechanisms like SharedArrayBuffer. Communication between the main thread and workers relies on an event-based messaging system (postMessage), which adds a layer of abstraction but ensures thread safety. While Java threads excel at shared-state concurrency, Node.js worker threads prioritize isolation and simplicity, making them ideal for offloading CPU-bound tasks without blocking the event loop.

Python threading operates within a single memory heap, allowing all threads to access shared variables and data structures. However, Python’s Global Interpreter Lock (GIL) restricts true parallel execution of threads within a single process, making threading more suitable for I/O-bound tasks rather than CPU-intensive operations. Python developers often turn to multiprocessing for parallelism in compute-heavy scenarios. Node.js worker threads, on the other hand, bypass similar limitations by creating entirely independent execution contexts for each thread. This approach avoids contention issues like those caused by Python’s GIL but sacrifices direct memory sharing between threads. Instead, developers use message-passing or shared memory buffers to transfer data between the main thread and workers. While Python threading is often constrained by its interpreter, Node.js worker threads leverage V8’s capabilities to achieve efficient parallel processing for computationally intensive workloads.

Real-World Implementation: Worker Threads in Action

Let's examine a practical implementation of worker threads using the provided code examples. What we are tryng to implement here is a nodejs server offloading some compute heavy operations to a worker thread. There are two files, server.js (for running the server) and worker.js (for the worker thread).

Main Server Implementation (server.js)

const http = require('http');

const { Worker } = require('worker_threads');

const url = require('url');

const hostname = '127.0.0.1';

const port = 3000;

// Function to offload work to a worker thread

function runWorker(input) {

return new Promise((resolve, reject) => {

const worker = new Worker('./worker.js', { workerData: input }); // Pass input to the worker

worker.on('message', resolve);

worker.on('error', reject);

worker.on('exit', (code) => {

if (code !== 0) reject(new Error(`Worker stopped with exit code ${code}`));

});

});

}

const server = http.createServer(async (req, res) => {

const parsedUrl = url.parse(req.url, true); // Parse the URL

const query = parsedUrl.query; // Get query parameters

res.setHeader('Content-Type', 'text/plain');

if (parsedUrl.pathname === '/' && req.method === 'GET') {

res.statusCode = 200;

res.end('Root: 200 ok');

} else if (parsedUrl.pathname === '/test' && req.method === 'GET') {

try {

const input = query.input || 'default'; // Extract query parameter 'input'

res.statusCode = 200;

const result = await runWorker(input); // Pass query parameter to the worker

res.end(`Input: ${input}, Result: ${result}`);

} catch (err) {

res.statusCode = 500;

res.end(`Error: ${err.message}`);

}

} else {

res.statusCode = 404;

res.end('404 Not Found');

}

});

server.listen(port, hostname, () => {

console.log(`Server running at http://${hostname}:${port}/`);

});

Let's break down what's happening here:

- We create a simple HTTP server with two endpoints:

/and/test - The

/endpoint returns immediately, demonstrating a non-blocking response. Consider this for health checks or other normal APIs. - The

/testendpoint offloads work to a worker thread via therunWorkerfunction. It also passes query parameterinput's value to the worker thread. - The

runWorkerfunction:- Creates a new worker thread running the code in

worker.js - Passes input data to the worker

- Returns a Promise that resolves when the worker sends a message back

- Properly handles errors and worker exit codes

- Creates a new worker thread running the code in

This design keeps the main thread free to handle other requests even while the worker is performing its CPU-intensive task.

Worker Implementation (worker.js)

// Import worker_threads to communicate with the main thread

const { parentPort, workerData } = require('worker_threads');

// Simulate a long computation

function longCompute(workerData) {

console.log(`Inside worker. Worker data: ${workerData}`);

// start tracking the current time before the process

var start = new Date().getTime();

// Simulate a long running process

for(var i = 0; i < 6000000000; i++){

var x = i * i;

}

// get the end time and diff

var end = new Date().getTime();

var time = end - start;

// get the time in seconds

var seconds = time / 1000;

return seconds;

}

// Perform the computation and send the result back to the main thread

parentPort.postMessage(longCompute(workerData));

The worker thread:

- Receives input through the

workerDataparameter - Performs a CPU-intensive calculation (simulated with a long-running loop)

- Measures and returns the execution time in seconds

- Sends the result back to the main thread using

parentPort.postMessage()

The key benefit here is that while this computation runs, it happens in a separate thread, leaving the main thread free to handle other requests, respond to health checks, and maintain application responsiveness.

Best Practices for Worker Threads in Production

Based on real-world experience and the Node.js documentation, here are key best practices for implementing worker threads:

1. Use Worker Threads Only for CPU-Intensive Tasks

While worker threads are a powerful addition to Node.js, they come with their own trade-offs, including added complexity and overhead. For this reason, they should be used judiciously and only when absolutely necessary.

The ideal use cases for worker threads are scenarios where heavy computation risks blocking the main thread. For example, tasks like complex mathematical calculations or data processing that involve transforming large datasets are prime candidates. Similarly, image manipulation—such as resizing or applying filters—can be computationally expensive and benefit greatly from being offloaded to a worker thread. Parsing massive JSON files or running machine learning inference locally are other examples where worker threads shine, as these tasks can quickly overwhelm the single-threaded event loop if not handled properly.

By isolating these CPU-bound operations in separate threads, you can keep your main thread free to handle I/O tasks and respond to incoming requests, ensuring your application remains responsive and performant even under heavy workloads. However, it’s important to carefully evaluate whether a task truly warrants the use of worker threads or if it can be optimized in other ways before introducing the additional complexity they bring.

2. Implement a Worker Thread Pool

Creating and destroying worker threads has overhead, so for production applications, implement a pool of reusable worker threads:

class WorkerPool {

constructor(size, workerScript) {

this.size = size;

this.workerScript = workerScript;

this.workers = [];

this.freeWorkers = [];

for (let i = 0; i < size; i++) {

const worker = new Worker(workerScript);

this.workers.push(worker);

this.freeWorkers.push(worker);

}

}

runTask(data) {

return new Promise((resolve, reject) => {

if (this.freeWorkers.length === 0) {

reject(new Error('No free workers available'));

return;

}

const worker = this.freeWorkers.pop();

const messageHandler = (result) => {

worker.removeListener('message', messageHandler);

worker.removeListener('error', errorHandler);

this.freeWorkers.push(worker);

resolve(result);

};

const errorHandler = (error) => {

worker.removeListener('message', messageHandler);

worker.removeListener('error', errorHandler);

this.freeWorkers.push(worker);

reject(error);

};

worker.once('message', messageHandler);

worker.once('error', errorHandler);

worker.postMessage(data);

});

}

close() {

for (const worker of this.workers) {

worker.terminate();

}

}

}

3. Handle Communication Efficiently

When working with large datasets, optimize how you transfer data between the main thread and workers:

-

For large binary data, use

ArrayBufferwith the transfer list parameter to avoid copying data:const buffer = new ArrayBuffer(1024 * 1024); // 1MB buffer worker.postMessage({ data: buffer }, [buffer]); -

For data that needs to be accessed by both threads simultaneously, consider using

SharedArrayBuffer.

4. Implement Robust Error Handling

Worker threads can crash independently of the main thread. Implement proper error handling:

worker.on('error', (err) => {

console.error('Worker error:', err);

// Implement recovery strategy - perhaps create a new worker

});

worker.on('exit', (code) => {

if (code !== 0) {

console.error(`Worker stopped with exit code ${code}`);

// Handle unexpected termination

}

});

5. Monitor Worker Thread Performance

In production environments, monitor your worker threads to detect issues:

- Track CPU and memory usage per worker

- Monitor task execution times

- Set timeouts for worker operations to prevent runaway processes

Conclusion

Node.js's single-threaded event loop architecture is incredibly powerful for I/O-bound applications but has inherent limitations when dealing with CPU-intensive tasks. Worker threads provide an elegant solution to these limitations, allowing you to maintain the responsiveness of your Node.js applications even when performing heavy computations.

For developers transitioning from multi-threaded environments like Java, Python, or Go, understanding Node.js's unique concurrency model and properly implementing worker threads will help you build robust, production-ready applications that make the most of both worlds: the simplicity and efficiency of Node.js's event-driven model and the parallel processing capabilities of multi-threading.

By following the patterns and practices outlined in this guide, you can ensure your Node.js applications remain responsive in containerized environments like Kubernetes, successfully handling health checks and avoiding cascading failures caused by main thread blocking.

Remember that worker threads should be used judiciously - they're a powerful tool for specific scenarios, not a replacement for Node.js's core asynchronous patterns. When implemented correctly, they allow your Node.js applications to handle even the most demanding computational tasks without sacrificing responsiveness.